Artificial Intelligence has undergone a renaissance in the past few years, with Large Language Models (LLMs) playing a starring role in everything from chatbots to automated translation systems. Yet, for many businesses, this technology has seemed out of reach—implying a need for vast data centers, specialised hardware, and multi-million-dollar budgets. That changed with DeepSeek R1, a groundbreaking open-source large language model that redefines the conversation around what is possible with limited resources.

At first glance, hearing about “large language models” may trigger images of massive server farms run by tech giants. But DeepSeek R1’s story flips this narrative, proving that with a healthy dose of innovation, resourcefulness, and community-driven collaboration, you can still achieve incredible results without needing a Fortune 500 budget. This blog post dives into exactly how DeepSeek R1 came to life, how it is open-sourced to spark innovation across diverse industries, and why all of this matters for entrepreneurs, developers, and researchers who might be on the fence about adopting AI technology.

Key Highlights

In this post, we will explore:

- What DeepSeek R1 is, and why it is different.

- How the team behind it innovated with limited hardware and capital.

- The open-source philosophy that makes DeepSeek R1 a game-changer.

- Potential applications spanning multiple industries.

- Why you do not need billions to harness these powerful tools for your own projects.

Whether you are building an AI-driven chatbot for customer service, analyzing massive data sets for pharmaceutical research, or brainstorming a next-gen content creation platform, DeepSeek R1’s open-source ecosystem can provide a solid foundation—no million-dollar hardware cluster required.

Topic Breakdown

What Is DeepSeek R1?

DeepSeek R1 is a Large Language Model (LLM) developed with a clear mission: to democratise advanced AI capabilities. It offers many of the advanced features found in big-budget AI projects—complex language understanding, reasoning skills, context awareness, and robust generation of text. However, what sets DeepSeek R1 apart is how it was built:

- Sparse Mixture-of-Experts (MoE) Architecture

This approach allows different “experts” (sub-networks) within the model to specialize in specific topics or functions. Instead of lighting up an entire massive network each time, only a limited subset of the parameters is used for any given task. This design can greatly reduce both training and inference costs. - Optimized Resource Utilization

DeepSeek R1 focuses heavily on software-based optimizations. The creators used multi-token training objectives, advanced caching strategies, and quantisation (reducing the precision of certain calculations). All these steps cut down the GPU memory needs and computation overhead. - Emphasis on Open Source

Right from the start, DeepSeek’s philosophy was that the broadest community possible should be able to use the model, contribute improvements, and create entire businesses around it. This has spawned a community of tinkerers, app developers, researchers, and domain experts—from healthcare to finance—who integrate DeepSeek R1’s technology into their specialized fields.

At its core, DeepSeek R1 demonstrates that top-tier performance can be achieved even outside the realm of giant data centers. By open-sourcing it, the team has empowered a global audience to adapt and refine the model for practically any need.

Innovating with Limited Resources

The biggest paradigm shift here is that we typically hear of big AI labs spending hundreds of millions of dollars on massive GPU clusters to train their LLMs. DeepSeek R1’s story is proof that resource constraints can spark rather than stifle innovation. Below are a few ways they pulled off such a feat:

1. Smart Architectural Choices

DeepSeek R1’s architects recognized that, for most tasks, the entire model does not need to be “awake” at once. Hence, they leaned on a Mixture-of-Experts approach, which routes different inputs to specialized sub-models. By doing so, they spared themselves from having to power a monolithic structure with billions of parameters on every single token. This drastically cuts down on compute overhead.

2. Low-Precision Training (FP8/BF16)

Traditional 32-bit floating point computations are costly. DeepSeek R1 introduced a fine-grained usage of 8-bit or 16-bit floating point numbers wherever possible. Reduced precision training can halve or quarter the memory usage—and with the right calibration and error-checking, you do not lose meaningful accuracy. This step alone saves millions in potential GPU hours.

3. Pipeline Parallelism & Communication Efficiencies

Training a large model across multiple GPUs or nodes is rarely straightforward. The DeepSeek R1 team refined pipeline parallelism, ensuring minimal idle times and maximum overlap between forward and backward passes of training. They also implemented advanced “all-to-all” communication protocols to route data efficiently and not waste resources on networking bottlenecks.

4. Open Collaboration and Feedback

By opening up early alpha versions to a community of testers, DeepSeek R1 leveraged crowd-sourced bug reports, performance suggestions, and data contributions. In short, they built an ecosystem around the model, which further accelerated improvements without incurring the cost of a massive internal staff. This open feedback loop let them iterate swiftly, fix inefficiencies, and incorporate user-driven features.

5. Targeted Hardware Partnerships

While they did not have infinite budgets, they partnered with smaller cloud providers and hardware vendors open to cutting-edge experiments. These providers offered flexible GPU time or special deals in return for publicity or data from AI benchmarking. Strategic partnerships like these extend the “budget runway” and ensure the model can be trained on enough data to remain competitive.

The Power of Being Open Source

One of the greatest achievements of DeepSeek R1 is the team’s decision to open-source it under a permissive license. This has several direct consequences for the AI community—and beyond:

- Rapid Innovation

Because anyone can inspect, modify, and integrate the code base, improvements happen at lightning speed. People fix bugs, add new training strategies, localize the model to new languages, or adapt it for specialized domains. - Lower Barriers to Entry

Startups and even independent developers can build powerful AI-driven solutions without worrying about licensing fees or multi-million-dollar hardware bills. The model runs on smaller GPU clusters or even commodity hardware with some optimisations. - Application Diversity

By placing the technology in the hands of many, creative uses abound. We see next-level chatbots, voice assistants, automated coding tools, research assistants, digital therapy aids, and beyond. Each specialized use case can further refine how the model is adapted. - Community Ecosystem

From Slack channels to GitHub repos, a vibrant open-source community forms around DeepSeek R1. This community fosters knowledge-sharing, meets regularly at virtual events, and can become an invaluable source of talent for any AI-related venture.

Breaking the Myth: You Don’t Need Billions to Build AI

For a long time, a major roadblock to building an AI startup was the assumption that you needed incredible financial backing to get anywhere. DeepSeek R1’s success story challenges that notion.

- Reduced Hardware Requirements:

Sparse computations, quantisation, and multi-token training strategies allow the model to run on smaller GPU clusters. This alone lops off a huge chunk of the typical AI budget. - Shared Knowledge & Codebase:

The open-source ecosystem provides code, best practices, and even pre-built solutions. You do not need to create every wheel from scratch. Want to integrate DeepSeek R1 as a customer service chatbot? Community-driven libraries and sample code can compress development times from months to weeks or days. - Proof of Viability:

If a startup founder can point to an open-source model like DeepSeek R1 to show that they do not need $100 million in capital for data center expenses, fundraising discussions become more flexible. Investors see that open-source LLMs have proven track records, letting them back smaller AI projects with more confidence.

Real-World Applications Across Industries

1. Healthcare

Hospitals or research labs can tune DeepSeek R1 on medical data for tasks like summarizing patient records, scanning medical literature, or generating case study overviews. Low resource usage means smaller clinics might also benefit, not just major hospital systems.

2. Finance

Financial advisors, trading startups, or compliance teams often need to parse thick volumes of text—market data, regulations, risk assessments. DeepSeek R1 can be customized for domain-specific jargon. Plus, in-house servers (instead of giant data centers) can suffice, maintaining data privacy more easily.

3. Education

From automated grading to adaptive learning platforms, DeepSeek R1 can power novel educational tools. Small EdTech companies no longer need top-tier GPU racks to build intelligent tutoring systems. They can also ensure consistent and tailored teaching content for students around the globe.

4. Retail & eCommerce

Chatbots, product recommendation engines, automated product description generators—these are all prime use cases. Imagine a niche e-commerce store using DeepSeek R1 to handle customer support queries 24/7, in multiple languages, with minimal overhead.

5. Software Development

Ironically, AI can help build better software. DeepSeek R1’s advanced code understanding or generation capabilities reduce the grunt work for developers. Startups can integrate it into IDEs or use it to automatically generate test cases or documentation. By open-sourcing DeepSeek R1, the entire software development pipeline can be accelerated.

6. Marketing & Advertising

Personalized email campaigns, social media content generation, or even brand strategy outlines can all be partially automated using an LLM. Smaller marketing agencies might not have had the capital to license enterprise AI solutions, but with an open-source model like DeepSeek R1, they can affordably deploy advanced copywriting solutions.

The Future of AI Belongs to the Builders

We live in an era when advanced AI technology is no longer the exclusive domain of multinational corporations. DeepSeek R1 stands at the forefront of a wave of open-source LLMs that are scaling new heights of intelligence while keeping budgets low. Innovation thrives under constraints, and the DeepSeek R1 team’s resourceful approach is a blueprint for others.

- Collaborative Evolution: Expect new “versions” of DeepSeek R1 or specialized spin-offs to appear with domain-specific improvements—from legal to tourism to engineering.

- Broader Community Support: More AI enthusiasts will join the conversation, refine the tools, and push the boundaries.

- Ethical and Responsible AI: Open-source approaches also encourage transparency. Everyone has the opportunity to peek inside, evaluate biases, and propose solutions.

FAQs About DeepSeek R1

What is DeepSeek’s R1 model?

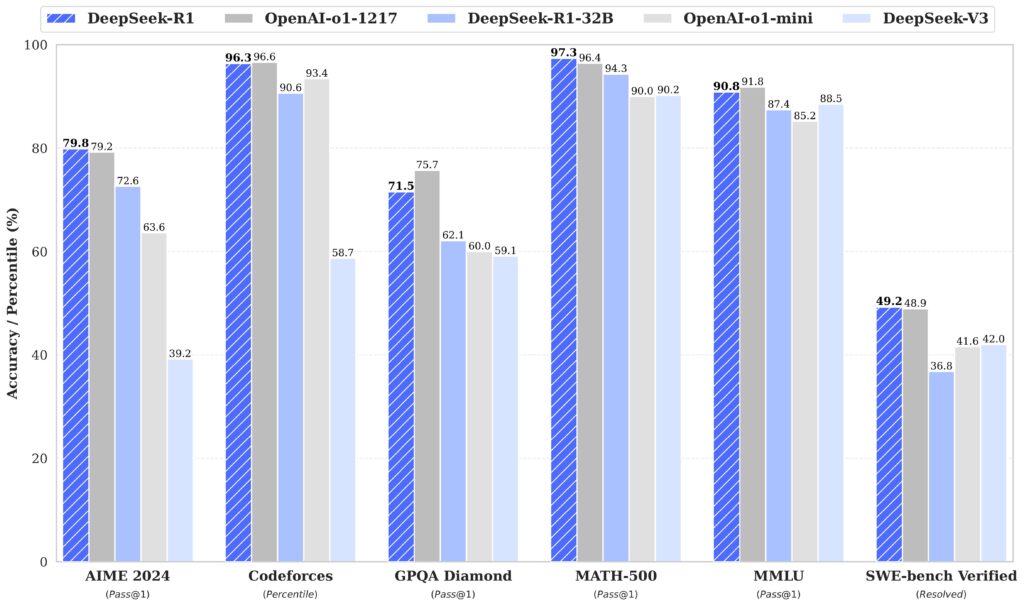

DeepSeek’s R1 is an open-source AI model that matches the performance of leading AI models but is developed at a fraction of the cost. It excels in tasks such as mathematics, coding, and natural language reasoning.

How does DeepSeek R1 differ from other AI models?

Unlike many AI models that require substantial computing resources, DeepSeek R1 achieves similar performance using fewer chips and innovative training methods. This approach makes it more cost-effective and accessible.

Is DeepSeek R1 open source?

Yes, DeepSeek R1 is open-source, allowing developers worldwide to access, modify, and implement it in various applications.

What impact has DeepSeek R1 had on the tech industry?

The release of DeepSeek R1 has caused significant disruptions in the tech industry, leading to substantial declines in the stock values of major tech companies. Its cost-effective approach has prompted a reevaluation of investments in AI infrastructure.

Are there any concerns associated with DeepSeek R1?

While DeepSeek R1 is praised for its efficiency, there are concerns regarding data privacy and potential censorship, especially given its alignment with Chinese regulations. Users should be aware of these factors when considering its implementation.

Conclusion

DeepSeek R1 is a resounding statement that you can achieve big wins without big pockets. By adopting a Sparse Mixture-of-Experts design, leveraging low-precision training, smart parallelism, and strong community collaboration, they managed to build a powerful LLM on a tight budget. Even more significant is their decision to open-source the model, creating a global playground for innovation—from code generation in software development, to advanced text analysis in finance, to data-driven insights in healthcare, and so much more.

This is a clarion call for entrepreneurs, developers, researchers, and anyone curious about artificial intelligence: You no longer need millions or billions to build something extraordinary. By starting with DeepSeek R1, you can develop AI applications that solve real problems in your industry. Access, refine, and deploy the model on smaller GPU clusters or partner with modest cloud providers, all while benefiting from a thriving open-source community.

In short, if you’ve been dreaming of building that AI-infused business or unleashing a new wave of productivity in your existing enterprise, let DeepSeek R1 be your launching pad. The time to seize the future of AI is now—without draining your entire budget. When you adopt open-source solutions like DeepSeek R1, you join a growing network of pioneers who believe in accessible, responsible, and cutting-edge AI for all.

Embrace the journey, explore the code, and join a creative global community. With DeepSeek R1, the AI frontier is yours to shape.

Want to learn more about DeepSeek R1?