If you’ve been evaluating agentic AI beyond demo-ware, The Google Cloud Startup Technical Guide: AI Agents is one of the clearest roadmaps for going from prototype to production. It breaks the problem into three big chunks: understand the agent ecosystem, build the agent, and make it reliable and responsible in production—with concrete tools for each step.

This article translates that guidance into an operational playbook you can apply immediately—no marketing gloss, just the parts founders and CTOs need: which Google Cloud components matter (and why), how ADK and Vertex AI Agent Engine fit, when to lean on MCP and A2A for interoperability, what “grounding” actually looks like in practice, and how AgentOps turns experiments into dependable software products. We’ll also show where Agentspace fits once more teams want in.

Topic Breakdown

1) The Google Cloud Agent Ecosystem in One View

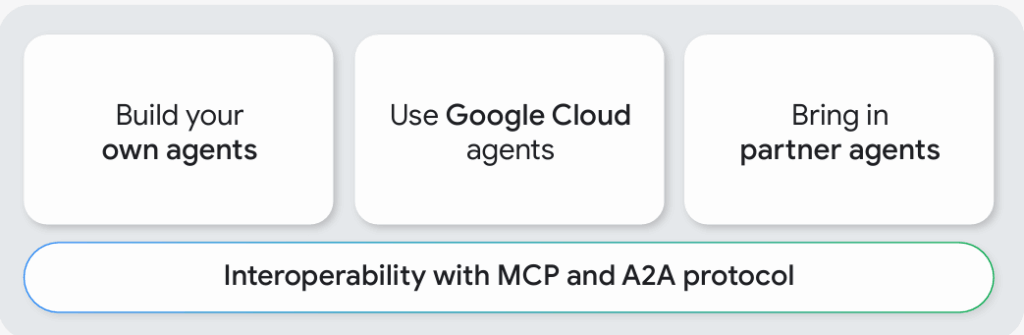

Google Cloud frames three complementary paths: build your own agents, use Google Cloud agents, and bring partner agents, all underpinned by two interoperability pillars—Model Context Protocol (MCP) and Agent2Agent (A2A)—so agents can discover tools, talk to each other, and coordinate across teams and vendors.

“The agentive workflow is the next frontier… give AI a complex goal and have it orchestrate the multi-step tasks needed to achieve it.” — Thomas Kurian, CEO Google Cloud.

Why this matters

- Avoid the monolith: using MCP/A2A lets you compose specialised agents and tools instead of building a fragile, all-in-one bot.

- Choice of build path: code-first with Agent Development Kit (ADK), application-first with Agentspace, or managed Google agents where appropriate.

2) Core Concepts: Models, Tools, Orchestration, Runtime

The guide identifies four moving parts you must design on day one:

- Model (reasoning): pick the lightest model that meets your quality bar for each task; reserve larger models for genuinely hard problems.

- Tools (actions): well-typed function interfaces so the model can decide what to call and how.

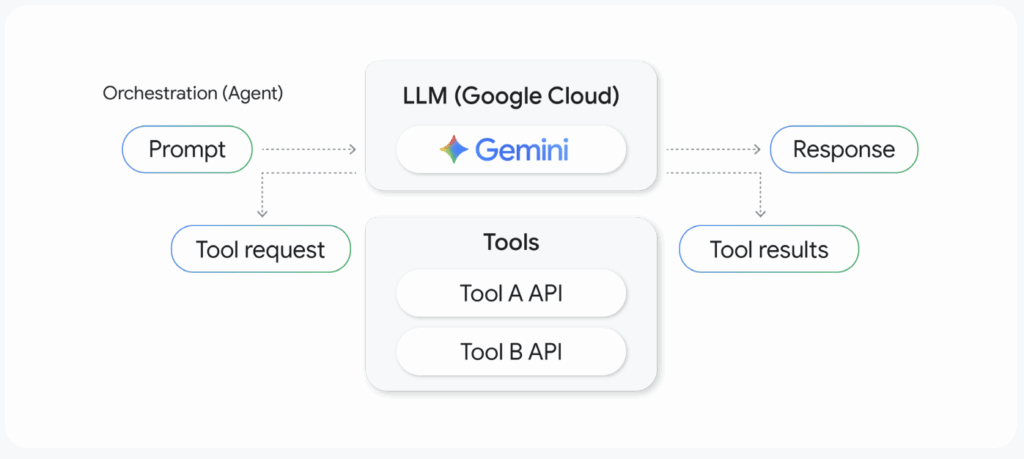

- Orchestration (executive function): the control loop that plans, calls tools, observes results, and repeats—often the ReAct pattern.

- Runtime: a platform that scales, secures, observes, and integrates.
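
The Model bullet boils down to a selection rule: among the models that clear your quality bar on a given task, use the cheapest. Here is a minimal sketch, assuming you already have per-task scores from your own eval set. The model names, costs, and scores are placeholders, not real Gemini figures.

```python
from dataclasses import dataclass, field


@dataclass
class ModelOption:
    name: str
    relative_cost: float                              # placeholder, e.g. per 1K tokens
    eval_scores: dict = field(default_factory=dict)   # task -> score (0..1) on your evals


def pick_model(task: str, options: list, quality_bar: float) -> ModelOption:
    """Return the cheapest model whose score on `task` meets the quality bar."""
    eligible = [m for m in options if m.eval_scores.get(task, 0.0) >= quality_bar]
    if not eligible:
        raise LookupError(f"no model reaches {quality_bar} on {task!r}")
    return min(eligible, key=lambda m: m.relative_cost)


# Hypothetical options and scores, for illustration only.
OPTIONS = [
    ModelOption("small-model", 0.1, {"classify_ticket": 0.94, "plan_refund": 0.62}),
    ModelOption("large-model", 1.0, {"classify_ticket": 0.96, "plan_refund": 0.91}),
]
print(pick_model("classify_ticket", OPTIONS, 0.9).name)  # small-model
print(pick_model("plan_refund", OPTIONS, 0.9).name)      # large-model
```

Re-run the scoring whenever a model version changes. The "lightest model" answer can move as models improve.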

ReAct in practice. Orchestration uses a cycle of Reason → Act (tool) → Observe; the guide’s diagram shows prompts flowing through Gemini, selecting Tool A/B, then producing a response once the goal is satisfied. This isn’t a chat; it’s a goal-seeking control loop.
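
The following framework-free sketch makes the Reason → Act → Observe cycle concrete. `scripted_model` is a hypothetical stand-in for an LLM call. In ADK, the model and framework make this decision for you. Both tools are stubs. The sketch is about the shape of the loop, including a step budget that stops a confused agent from spinning forever.

```python
from typing import Callable


# Hypothetical tools: plain functions the loop can dispatch to by name.
def get_order_status(order_id: str) -> str:
    """Return the shipping status for an order (stubbed data)."""
    return {"A100": "shipped", "B200": "processing"}.get(order_id, "unknown")


def refund_eligible(status: str) -> bool:
    """Toy policy: orders that have not shipped yet can be refunded."""
    return status == "processing"


TOOLS: dict[str, Callable] = {
    "get_order_status": get_order_status,
    "refund_eligible": refund_eligible,
}


def scripted_model(goal: str, observations: list) -> dict:
    """Stand-in for an LLM call: choose the next tool, or finish."""
    if not observations:
        return {"tool": "get_order_status", "args": {"order_id": goal}}
    if len(observations) == 1:
        return {"tool": "refund_eligible", "args": {"status": observations[0]}}
    verdict = "approved" if observations[1] else "denied"
    return {"final": f"Order {goal}: refund {verdict}"}


def react_loop(goal: str, model=scripted_model, max_steps: int = 5):
    """Reason -> Act -> Observe until the model returns a final answer."""
    observations, trajectory = [], []
    for _ in range(max_steps):
        decision = model(goal, observations)                  # Reason
        if "final" in decision:
            return decision["final"], trajectory
        result = TOOLS[decision["tool"]](**decision["args"])  # Act
        observations.append(result)                           # Observe
        trajectory.append((decision["tool"], decision["args"], result))
    raise RuntimeError("step budget exhausted before the goal was met")


answer, steps = react_loop("B200")
print(answer)  # Order B200: refund approved
```

The returned `trajectory` is the raw material for the trajectory evals and explainability logs discussed in the AgentOps sections.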

3) Build Path #1: Code-first with ADK

If you need control and composability, ADK (Agent Development Kit) is designed for you. Highlights from the guide:

- Orchestration logic: implement ReAct and workflow logic for multi-step tasks.

- Tool definition & registration: clear signatures and docstrings so the model can choose the right tool.

- Context & memory: recall user preferences and conversation history safely.

- Evaluation & observability: test trajectories and inspect reasoning steps.

- Containerisation: agents become FastAPI web services you can deploy anywhere.

- Multi-agent composition: coordinate specialised agents where it helps.
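
ADK can register a plain Python function as a tool, deriving the tool's declaration from its name, type hints, and docstring. The standard-library sketch below shows that kind of derivation and why the guide stresses clear signatures and docstrings. `declare_tool` and `lookup_customer` are hypothetical, this is not ADK's implementation, and it maps only a handful of Python types to JSON Schema types.

```python
import inspect
from typing import get_type_hints

# Small subset of Python -> JSON Schema type names.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def declare_tool(fn) -> dict:
    """Build a function declaration (name, description, parameters)
    from a Python function's signature and docstring."""
    hints = get_type_hints(fn)
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default -> the model must supply it
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def lookup_customer(email: str, include_orders: bool = False) -> dict:
    """Look up a customer record by email. Set include_orders to also
    return recent orders."""
    return {"email": email, "orders": [] if include_orders else None}


print(declare_tool(lookup_customer))
```

The model only sees what this declaration contains. A vague docstring or an untyped parameter therefore directly degrades tool selection.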

Where it deploys. ADK agents are containerised and deploy cleanly to Vertex AI Agent Engine, Cloud Run, or GKE—the docs call out all three as first-class.

4) Build Path #2: Application-first with Agentspace

When non-engineering teams want to build and orchestrate agents across company data, Agentspace is the application-first path: unified search across SaaS apps, multimodal synthesis, a no-code Agent Designer, and a pre-built agent library. It lets you scale usage without bottlenecking engineering.

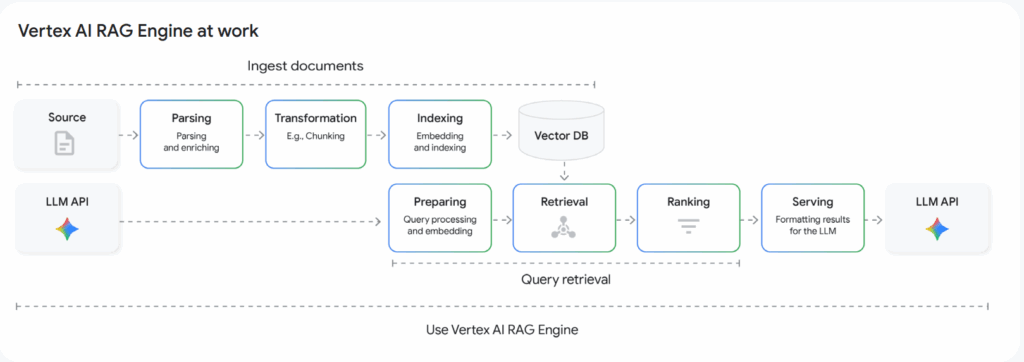

5) Grounding: From RAG to Agentic RAG (and Memory Bank)

Grounding reduces hallucinations by retrieving relevant context before the model reasons. The guide recommends starting with RAG, moving through GraphRAG when relationships matter, and graduating to Agentic RAG for multi-step, tool-driven search and verification—an essential ingredient for agents that work rather than just talk.
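
The basic RAG step works like this: retrieve the most relevant chunks, keep their source ids, and put them in front of the model with an instruction to cite them. This sketch swaps in naive word-overlap scoring for the embedding-based vector search you would use in practice (for example via Vertex AI RAG Engine or Vertex AI Search). The corpus and ids are made up.

```python
import re
from collections import Counter

# Tiny in-memory corpus; each chunk keeps its source id so answers can cite it.
CORPUS = [
    {"id": "refund-policy#2", "text": "Refunds are allowed within 30 days for unshipped orders."},
    {"id": "shipping-faq#1", "text": "Orders ship within 2 business days from the warehouse."},
    {"id": "refund-policy#5", "text": "Shipped orders must be returned before a refund is issued."},
]


def tokens(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, k: int = 2) -> list:
    """Rank chunks by word overlap with the query (stand-in for vector search)."""
    q = tokens(query)
    scored = [(sum((q & tokens(c["text"])).values()), c) for c in CORPUS]
    scored = [s for s in scored if s[0] > 0]  # drop chunks with no overlap
    scored.sort(key=lambda s: s[0], reverse=True)
    return [dict(c, score=score) for score, c in scored[:k]]


def grounded_prompt(query: str) -> str:
    """Place retrieved context, tagged with source ids, ahead of the question."""
    context = "\n".join(f"[{h['id']}] {h['text']}" for h in retrieve(query))
    return ("Answer using only the sources below and cite their ids.\n"
            f"{context}\n\nQuestion: {query}")


print(grounded_prompt("Can I get a refund for shipped orders?"))
```

Keeping the ids attached to each chunk is what makes citation logging and grounding evals possible later.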

For persistent personalisation, Vertex AI Memory Bank (preview) distils long conversation histories into compact facts your agent can recall, instead of repeatedly re-ingesting raw transcripts. It is designed to run on Vertex AI Agent Engine and exposes API methods such as GenerateMemories and CreateMemory.
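
The core idea behind Memory Bank is to store distilled facts rather than transcripts, and it can be shown without the service itself. This toy uses hand-written regex rules where Memory Bank uses a model to extract memories. `MemoryStore` is a hypothetical class, not the Memory Bank API. It only shows why recall stays small as conversations grow and why newer facts should replace stale ones.

```python
import re

# Hypothetical extraction rules; Memory Bank uses a model for this step.
RULES = {
    "timezone": re.compile(r"my time ?zone is ([\w/+-]+)", re.I),
    "language": re.compile(r"(?:reply|answer) in (\w+)", re.I),
}


def distil(transcript: list) -> dict:
    """Reduce a transcript to the latest value of each known fact."""
    facts = {}
    for turn in transcript:
        for key, pattern in RULES.items():
            match = pattern.search(turn)
            if match:
                facts[key] = match.group(1)  # later turns overwrite earlier ones
    return facts


class MemoryStore:
    """Per-user fact store: merge distilled facts instead of keeping raw text."""

    def __init__(self):
        self._facts = {}

    def update(self, user_id: str, transcript: list) -> None:
        self._facts.setdefault(user_id, {}).update(distil(transcript))

    def recall(self, user_id: str) -> dict:
        return dict(self._facts.get(user_id, {}))


store = MemoryStore()
store.update("u1", ["Hi, my timezone is Europe/London", "Please reply in French"])
store.update("u1", ["I moved; my time zone is America/New_York"])
print(store.recall("u1"))  # {'timezone': 'America/New_York', 'language': 'French'}
```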

6) Interoperability: MCP (tools/data) and A2A (agent-to-agent)

MCP standardises how apps expose tools and data so your agent can discover and call them; A2A standardises how agents discover each other and exchange tasks. In the guide, these two threads recur as the glue that turns siloed automations into a collaborative workforce.

- MCP benefit: write a tool once, make it discoverable by any MCP-aware agent or IDE.

- A2A benefit: publish an agent card (JSON), accept requests from other agents, and collaborate securely across teams and vendors.
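
On the wire, MCP uses JSON-RPC 2.0. A client discovers tools with `tools/list` and invokes one with `tools/call`. The handler below sketches just those two methods for a single stubbed tool, using only stdlib JSON. It is not a complete MCP server: there is no `initialize` handshake, capability negotiation, or stdio/HTTP transport. In practice you would use an official MCP SDK, or MCP Toolbox for Databases, rather than hand-rolling this.

```python
import json

# A tool described the way MCP's tools/list advertises tools:
# a name, a description, and a JSON Schema for the input.
TOOLS = {
    "get_weather": {
        "description": "Current conditions for a city (stubbed).",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
        "handler": lambda args: f"{args['city']}: 18 C, clear (stub data)",
    }
}


def _reply(req_id, *, result=None, error=None) -> str:
    """Wrap a result or an error in a JSON-RPC 2.0 response envelope."""
    msg = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        msg["error"] = error
    else:
        msg["result"] = result
    return json.dumps(msg)


def handle(raw: str) -> str:
    """Answer one JSON-RPC request for the tools/list or tools/call method."""
    req = json.loads(raw)
    rid, method, params = req.get("id"), req.get("method"), req.get("params", {})
    if method == "tools/list":
        tools = [{"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
                 for n, t in TOOLS.items()]
        return _reply(rid, result={"tools": tools})
    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:  # unknown tool -> JSON-RPC "invalid params" (-32602)
            return _reply(rid, error={"code": -32602,
                                      "message": f"Unknown tool: {params.get('name')}"})
        text = tool["handler"](params.get("arguments", {}))
        return _reply(rid, result={"content": [{"type": "text", "text": text}],
                                   "isError": False})
    # Anything else -> JSON-RPC "method not found" (-32601)
    return _reply(rid, error={"code": -32601, "message": f"Method not found: {method}"})


print(handle('{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}'))
```

The "write once, discover anywhere" benefit comes from the fact that any MCP-aware client can read that `tools/list` response without knowing anything about your code.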

Real-world angle: the Zoom customer story in the guide shows A2A-enabled scheduling across Gmail and Calendar—reducing manual back-and-forth.

7) Runtime Options and When To Choose Each

The guide’s recommendation is pragmatic: pick the deployment surface that matches your maturity and ops constraints.

- Vertex AI Agent Engine — the fastest path to production for ADK-built agents; auto-scaling, identity & access, lifecycle APIs, and agent-specific features like Memory Bank and Example Store. Ideal for small teams who want to ship quickly.

- Cloud Run — serverless containers with fast cold starts and simple CI/CD; great when you already operate microservices on GCP and want to drop agents into the same pattern.

- GKE — choose Kubernetes when you need deep network/stateful control, GPUs/TPUs, or platform parity with existing services.

The guide includes a deployment diagram (BaseAgent → Container → Agent Engine / Cloud Run / Custom infra) and explains how adk api_server exposes your agent as a FastAPI web service, the same shape you then containerise for production.

8) From Prototype To Production: AgentOps

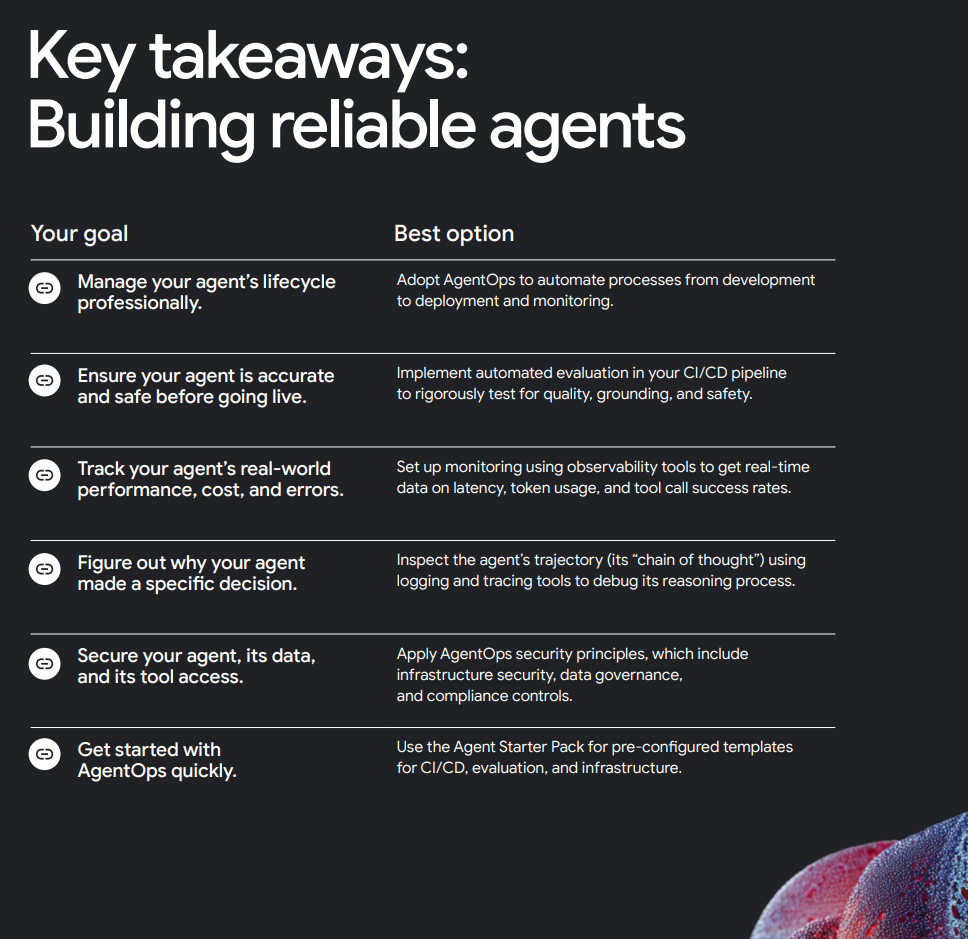

A standout section is AgentOps—a discipline for treating agents like real software products: you define guardrails, evaluate trajectories, and ship via CI/CD, not vibes. The “Key takeaways: Building reliable agents” page distils it into five founder-friendly goals: lifecycle management, pre-launch accuracy/safety evals, real-world performance tracking, explainability of decisions, and security/governance—plus a starter pack to get going quickly.

AgentOps essentials from the guide (adapted as a checklist):

- Security by default: least-privilege IAM for tools/data, encrypted transport and storage, and auditable logs.

- Guardrails at two levels: prompt/output validation in-app; security testing in CI/CD.

- Continuous evals: programmatic tests of both trajectory (step-by-step tool use) and final outputs (accuracy, helpfulness, grounding).

- Observability: latency, token usage, tool-call success, and failure taxonomies.

- Change management: version agents and prompts; gate releases with automated quality bars.
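
The continuous-evals and change-management items combine naturally into a single release gate. Run a fixed set of cases, check both the tool trajectory and the final answer, and fail the build below a pass-rate threshold. This is a minimal sketch: `EvalCase`, `run_gate`, `stub_agent`, and the substring-based output check are simplifications of our own. ADK ships its own evaluation tooling, and output quality is often scored by a model rather than by string matching.

```python
from dataclasses import dataclass


@dataclass
class EvalCase:
    query: str
    expected_tools: list   # the tool-call trajectory we expect, in order
    must_mention: list     # strings the final answer must contain


def run_gate(cases, agent, min_pass_rate: float = 0.9) -> bool:
    """Score trajectory and output for every case; pass only at or above the bar."""
    passed = 0
    for case in cases:
        answer, tools_called = agent(case.query)
        trajectory_ok = tools_called == case.expected_tools  # exact-match trajectory
        output_ok = all(s.lower() in answer.lower() for s in case.must_mention)
        if trajectory_ok and output_ok:
            passed += 1
    rate = passed / len(cases)
    print(f"eval pass rate: {rate:.0%} (bar {min_pass_rate:.0%})")
    return rate >= min_pass_rate


def stub_agent(query: str):
    """Hypothetical agent under test: returns (answer, tools_called)."""
    if "refund" in query:
        return "Refund approved for B200", ["get_order_status", "refund_eligible"]
    return "Order A100 has shipped", ["get_order_status"]


CASES = [
    EvalCase("refund order B200", ["get_order_status", "refund_eligible"], ["approved"]),
    EvalCase("where is order A100", ["get_order_status"], ["shipped"]),
]
run_gate(CASES, stub_agent)
```

In CI, you would call `run_gate` from a script and exit non-zero when it returns `False`. A regression in tool use then blocks the release just like a failing unit test.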

9) A Step-by-step Path To Your First Production Agent (30–60 days)

Use the guide’s structure to keep scope tight and momentum high:

Week 1 — Frame the thin slice

- Choose one workflow with clear business value (refund triage, lead enrichment, incident triage).

- Capture inputs, tools, data sources, and the definition of done for the agent.

Week 2 — Build the smallest viable agent with ADK

- Start with one or two well-typed tools; write crisp docstrings and parameter schemas.

- Implement ReAct; keep prompts short and reference schema/constraints rather than prose.

- Serve the agent locally with adk api_server (a FastAPI web service), then containerise it for deployment.

Week 3 — Grounding & memory

- Add RAG over the documents that matter; log citations and relevance for each answer.

- If personalisation is critical, test Memory Bank for distilled, privacy-respecting recall.

Week 4 — AgentOps gates & deploy

- Add trajectory evals, output evals, and safety checks to CI.

- Ship to Vertex AI Agent Engine (or Cloud Run if that matches today’s platform).

- Instrument logs/metrics; expose an internal preview to real users.

Week 5–8 — Parallelise and interoperate

- Where tasks are independent, introduce Parallel workflows for latency wins.

- For strict order, wrap with Sequential; for iterative refinement, use Loop.

- Publish an agent card; enable A2A collaboration with partner agents.
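
ADK provides workflow agents for these three patterns (`SequentialAgent`, `ParallelAgent`, `LoopAgent`). The sketch below does not use them. Instead it reproduces the control flow with plain `asyncio` so the difference between the patterns is easy to see. The lead-enrichment sub-agents are hypothetical stubs that sleep instead of calling real services.

```python
import asyncio
import time


async def sequential(steps, state):
    """Strict order: each step receives the state the previous step produced."""
    for step in steps:
        state = await step(state)
    return state


async def parallel(steps, state):
    """Independent steps run concurrently on the same input."""
    return await asyncio.gather(*(step(state) for step in steps))


async def loop(step, done, state, max_iters: int = 5):
    """Repeat a generate-test-fix step until done(state) or the iteration cap."""
    for _ in range(max_iters):
        state = await step(state)
        if done(state):
            break
    return state


# Hypothetical sub-agents; the sleeps stand in for I/O-bound calls.
async def fetch_crm(lead):
    await asyncio.sleep(0.2)
    return {"crm": f"{lead}: 3 open deals"}


async def fetch_news(lead):
    await asyncio.sleep(0.2)
    return {"news": f"{lead} raised a Series A"}


async def draft(state):
    return {**state, "draft": f"{state['crm']}; {state['news']}", "rev": 0}


async def add_sources(state):
    return {**state, "draft": state["draft"] + " [sources: crm, news]"}


async def refine(state):
    return {**state, "rev": state["rev"] + 1}


async def enrich(lead):
    parts = await parallel([fetch_crm, fetch_news], lead)      # independent
    state = {k: v for part in parts for k, v in part.items()}
    state = await sequential([draft, add_sources], state)      # strictly ordered
    return await loop(refine, lambda s: s["rev"] >= 2, state)  # iterative


start = time.perf_counter()
result = asyncio.run(enrich("Acme"))
print(result["draft"], "| revisions:", result["rev"])
print(f"{time.perf_counter() - start:.1f}s")  # about 0.2s rather than 0.4s, thanks to parallel()
```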

10) Data Layers: Working Memory, Long-Term Knowledge, System of Record

The guide repeatedly separates short-term working memory, long-term knowledge, and transactional state, then maps each to services that suit the job. A common pattern is:

- Working memory via cache (e.g., Vertex AI Agent Engine features or Memorystore patterns),

- Long-term via Vertex AI Search, BigQuery and Cloud Storage,

- Transactional via Cloud SQL or Spanner for the system of record.

It’s a practical reminder: don’t cram everything into a vector store; choose storage for consistency, latency, auditability, or semantic recall as needed.
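
Two of these layers are easy to illustrate with the standard library: a TTL cache for working memory and a transactional store for the system of record. The long-term knowledge layer is the RAG retrieval from the grounding section. In this sketch an in-process dict stands in for Memorystore, and in-memory SQLite stands in for Cloud SQL or Spanner. The schema and the `record_refund` helper are hypothetical.

```python
import sqlite3
import time


class WorkingMemory:
    """Short-lived session context with a TTL (stand-in for a cache such as Memorystore)."""

    def __init__(self, ttl_s: float):
        self.ttl_s = ttl_s
        self._items = {}

    def put(self, key, value):
        self._items[key] = (value, time.monotonic() + self.ttl_s)

    def get(self, key, default=None):
        value, expires_at = self._items.get(key, (default, float("inf")))
        if time.monotonic() > expires_at:
            del self._items[key]  # expired context is dropped, not served stale
            return default
        return value


def open_system_of_record() -> sqlite3.Connection:
    """In-memory SQLite as a stand-in for Cloud SQL or Spanner."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id TEXT PRIMARY KEY, status TEXT)")
    conn.execute("CREATE TABLE refunds (order_id TEXT, amount REAL)")
    conn.execute("INSERT INTO orders VALUES ('B200', 'paid')")
    conn.commit()
    return conn


def record_refund(conn: sqlite3.Connection, order_id: str, amount: float) -> None:
    """Change the order status and write the refund atomically, or do neither."""
    with conn:  # commits on success, rolls back if anything raises
        cur = conn.execute(
            "UPDATE orders SET status = 'refunded' WHERE id = ? AND status = 'paid'",
            (order_id,))
        if cur.rowcount != 1:
            raise ValueError(f"order {order_id} is not refundable")
        conn.execute("INSERT INTO refunds VALUES (?, ?)", (order_id, amount))
```

The design point is the `with conn:` block. The status change and the refund row commit together or not at all, which is a consistency guarantee a vector store does not give you.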

11) Real-world Signal: Zoom’s A2A Scheduling Story

A concise example from the guide: Zoom’s AI Companion integrates with Agentspace and A2A to automatically schedule meetings from Gmail context, update Google Calendar, and keep participants informed—reducing cross-platform friction and setting the stage for richer multi-agent workflows.

12) Risks and Responsibilities To Bake In From Day One

- Accuracy & grounding: embed RAG, require citations, and run output evals before release.

- Explainability: log the agent’s trajectory so you can inspect “why” a tool was called.

- Privacy & access: narrow the agent’s attack surface via least-privilege IAM across tools/data.

- Change control: version prompts and tools; promote through environments with quality gates. These are spelled out in the guide’s reliability key takeaways.
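
Explainability and change control both depend on recording each step with enough context to reconstruct it later. Here is a minimal sketch of structured trajectory logging: one JSON record per step, all sharing a trace id and stamped with the prompt version. `TrajectoryLogger` and its field names are our own. In production you would point `sink` at your logging pipeline (for example Cloud Logging) instead of `print`.

```python
import json
import time
import uuid


class TrajectoryLogger:
    """Emit one structured JSON record per agent step, so a reviewer can
    reconstruct why each tool was called and under which prompt version."""

    def __init__(self, agent: str, prompt_version: str, sink=print):
        self.trace_id = uuid.uuid4().hex  # ties all steps of one run together
        self.agent = agent
        self.prompt_version = prompt_version
        self.sink = sink
        self.step = 0

    def log(self, kind: str, **fields) -> None:
        self.step += 1
        record = {"trace_id": self.trace_id, "agent": self.agent,
                  "prompt_version": self.prompt_version, "step": self.step,
                  "kind": kind, "ts": time.time(), **fields}
        self.sink(json.dumps(record, default=str))


log = TrajectoryLogger("refund-agent", prompt_version="v3")
log.log("reason", thought="Need the order status before deciding")
log.log("tool_call", tool="get_order_status", args={"order_id": "B200"}, result="processing")
log.log("final", answer="Refund approved")
```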

13) When To Move To Multi-agent

Start with a single agent to avoid premature complexity. Shift to multi-agent when you have:

- Distinct, independently solvable sub-tasks (good for Parallel).

- Strictly ordered sub-steps (fit for Sequential).

- Iterative “generate-test-fix” loops (use Loop).

At organisational boundaries—or when collaborating with vendors—publish an A2A agent card and hand off work over A2A rather than inventing one-off APIs.
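
An agent card is a JSON document that describes who the agent is, where to reach it, and which skills it offers. The sketch builds one for a hypothetical scheduling agent. The field names follow the A2A specification as we understand it (camelCase keys such as `defaultInputModes` and a `skills` list), but the spec is evolving, so check the current version before publishing. The `REQUIRED` tuple is our own pre-publish checklist, not the spec's normative list, and the URL is a placeholder.

```python
import json

# Our own checklist of top-level fields to verify before publishing.
REQUIRED = ("name", "description", "url", "version", "capabilities",
            "defaultInputModes", "defaultOutputModes", "skills")


def build_agent_card() -> dict:
    """Card for a hypothetical scheduling agent (placeholder URL and skill)."""
    return {
        "name": "Scheduling Agent",
        "description": "Finds a meeting slot and sends calendar invites.",
        "url": "https://agents.example.com/scheduler",
        "version": "1.0.0",
        "capabilities": {"streaming": False, "pushNotifications": False},
        "defaultInputModes": ["text/plain"],
        "defaultOutputModes": ["text/plain"],
        "skills": [{
            "id": "schedule_meeting",
            "name": "Schedule meeting",
            "description": "Propose and book a slot for the given attendees.",
            "tags": ["calendar", "scheduling"],
            "examples": ["Book 30 minutes with Priya next Tuesday"],
        }],
    }


def validate_card(card: dict) -> list:
    """Return missing fields from our checklist (an empty list means OK)."""
    missing = [f for f in REQUIRED if f not in card]
    missing += [f"skills[{i}].id" for i, s in enumerate(card.get("skills", []))
                if "id" not in s]
    return missing


card = build_agent_card()
assert validate_card(card) == []
print(json.dumps(card, indent=2))
```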

14) The Founder’s Cheat Sheet

- Scope: one workflow, one agent, two tools, one runtime.

- Ground: RAG from day one; don’t trust free-text alone.

- Ship: containerise; deploy to Agent Engine; add evals to CI.

- Scale: Parallel/Sequential/Loop; publish A2A; embrace MCP tools; move to Agentspace as demand spreads.

The Google Cloud Startup Technical Guide: AI Agents—A Flexible Path To Production

Google Cloud’s technical guide provides an unusually opinionated—but flexible—path to production: ADK for code-first agents, Vertex AI Agent Engine for a fast, secure runtime, MCP and A2A for open interoperability, rigorous AgentOps to ensure reliability, and Agentspace to scale adoption beyond engineering. Treat agents as software products, not demos: ground them, evaluate them, observe them, and deploy them as first-class services. That’s how you move from promising prototypes to durable, defensible advantages this quarter—not next year.

External Resources

- Google Cloud ADK docs — https://google.github.io/adk-docs/

- Agentspace — https://cloud.google.com/products/agentspace

- Model Context Protocol (MCP) — https://googleapis.github.io/genai-toolbox/getting-started/mcp_quickstart/

- Agent2Agent (A2A) — https://a2a-protocol.org/latest/

- Vertex AI RAG overview — https://cloud.google.com/vertex-ai/generative-ai/docs/rag-engine/rag-overview
