Large language models (LLMs) have evolved far beyond single-turn chat interfaces, powering autonomous agents that can manage end-to-end workflows. OpenAI’s “A Practical Guide to Building Agents” condenses lessons from real-world deployments into a step-by-step manual for product and engineering teams. This deep dive expands on each section, covering everything from identifying high-impact use cases through to robust guardrails and monitoring, so you can confidently ship production-grade agents.

Topic Breakdown

Agents Defined: Beyond Chatbots

An agent is a system that autonomously accomplishes multi-step workflows on a user’s behalf, complete with decision-making, tool invocation, and failure recovery. Key characteristics:

- LLM-Driven Control Loop

- The agent leverages an LLM to interpret tasks, select tools, and decide when a workflow is complete or requires fallback.

- Dynamic Tool Selection

- From APIs to UI scrapers, the agent picks the right tool at each step, constrained by predefined guardrails.

- High Independence

- Unlike simple chatbots, agents proactively correct errors or escalate to users when uncertain.

Workflow here means any structured sequence—booking a table, processing an insurance claim, or committing code changes—where the agent must execute multiple steps reliably.

Choosing When to Build an Agent

Agents excel in scenarios where traditional rule-based automation fails. Look for environments that feature:

- Complex Decision-Making

e.g. Refund approvals with nuanced exception handling.

- Hard-to-Maintain Rulesets

e.g. Vendor security reviews where dozens of brittle if-then rules exist.

- Unstructured Data Reliance

e.g. Parsing free-form text claims from users.

Before diving in, validate that your target workflow truly requires agent flexibility. If it’s a straightforward pipeline, a deterministic script may suffice.

Design Foundations for Building Agents

Every agent is built on three pillars:

- Model

- The LLM that reasons over context and decides the next step.

- Tools

- External functions or APIs (data retrieval, action execution, or even other agents).

- Instructions

- Precise prompts that guide agent behaviour, mapping each step to a tool or interaction.

Selecting Your Models

Models come with trade-offs in capability, latency, and cost. Best practice:

- Baseline with Top-Tier Models

Prototype using the most capable LLM to establish an accuracy baseline.

- Iteratively Optimise

Swap in smaller models for simpler sub-tasks (e.g. retrieval) to reduce cost and latency, keeping them only where accuracy still meets the target.

- Automated Evals

Use OpenAI’s evals framework to benchmark each model against your workflow metrics.
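The "baseline, then optimise" loop can be sketched as a small harness that scores every candidate on the same cases and picks the cheapest one that still meets the accuracy target. This is a hypothetical illustration only: `Candidate`, `accuracy`, `pick_model`, the stub model callables and the cost figures are all assumptions, and none of it is part of OpenAI's evals framework.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Candidate:
    name: str
    cost_per_call: float           # illustrative figure, not a real price
    run: Callable[[str], str]      # stand-in for a real model call


def accuracy(candidate: Candidate, cases: list[tuple[str, str]]) -> float:
    """Fraction of cases where the output equals the expected answer."""
    hits = sum(1 for prompt, expected in cases if candidate.run(prompt) == expected)
    return hits / len(cases)


def pick_model(candidates: list[Candidate], cases: list[tuple[str, str]],
               target: float) -> Optional[Candidate]:
    """Return the cheapest candidate that meets the target, or None."""
    passing = [c for c in candidates if accuracy(c, cases) >= target]
    return min(passing, key=lambda c: c.cost_per_call, default=None)


# Stub "models": the large one is always right, the small one misses one case.
CASES = [("2+2", "4"), ("capital of France", "Paris"),
         ("3*3", "9"), ("colour of the sky", "blue")]
ANSWERS = dict(CASES)
large = Candidate("large-model", 1.00, lambda p: ANSWERS[p])
small = Candidate("small-model", 0.10, lambda p: "6" if p == "3*3" else ANSWERS[p])

best = pick_model([large, small], CASES, target=0.9)
print(best.name if best else "no candidate meets the target")
```

In a real setup the exact-match comparison would likely give way to a task-specific grader, and the cases would come from recorded workflow runs.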

Defining Tools

Tools extend agents into the real world:

- Data Tools

e.g., Database queries, document readers, web search.

- Action Tools

e.g., CRM updates, email sending, ticket hand-offs.

- Agent Tools

Specialist agents invoked via the Manager pattern.

Each tool should have a clear, versioned interface and documentation to boost discoverability and reusability.
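A minimal sketch of what a "clear, versioned interface" could look like in practice is shown below. `ToolSpec` and `ToolRegistry` are hypothetical names invented for this example, not part of any OpenAI library; the two registered tools are stubs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ToolSpec:
    name: str
    version: str
    description: str               # doubles as documentation for discoverability
    handler: Callable[..., object]


class ToolRegistry:
    def __init__(self) -> None:
        self._tools: dict[tuple[str, str], ToolSpec] = {}

    def register(self, spec: ToolSpec) -> None:
        key = (spec.name, spec.version)
        if key in self._tools:
            raise ValueError(f"{spec.name}@{spec.version} is already registered")
        self._tools[key] = spec

    def get(self, name: str, version: str) -> ToolSpec:
        return self._tools[(name, version)]

    def describe(self) -> list[str]:
        """One human-readable line per tool, sorted by name and version."""
        specs = sorted(self._tools.values(), key=lambda s: (s.name, s.version))
        return [f"{s.name}@{s.version}: {s.description}" for s in specs]


registry = ToolRegistry()
registry.register(ToolSpec("lookup_order", "1.0", "Fetch an order by ID (data tool).",
                           lambda order_id: {"id": order_id, "status": "shipped"}))
registry.register(ToolSpec("send_email", "2.1", "Send an email (action tool).",
                           lambda to, body: f"queued for {to}"))

for line in registry.describe():
    print(line)
```

Keying the registry on name and version lets an old agent keep calling `lookup_order@1.0` while a newer one moves to a later version.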

Crafting Unambiguous Instructions

The quality of instructions directly impacts agent reliability. Follow these guidelines:

- Leverage Existing SOPs & KBs

Convert policy articles into prompt steps.

- Break Tasks into Clear Steps

Map each step to a tool call or explicit action.

- Anticipate Edge Cases

Define branches for missing data or unexpected inputs.

- Auto-Generate with LLMs

Use a high-capability model to transform documents into numbered instructions, e.g., “Convert this help-centre article into numbered, unambiguous agent instructions.”

Orchestration Patterns: From Single to Multi-Agent

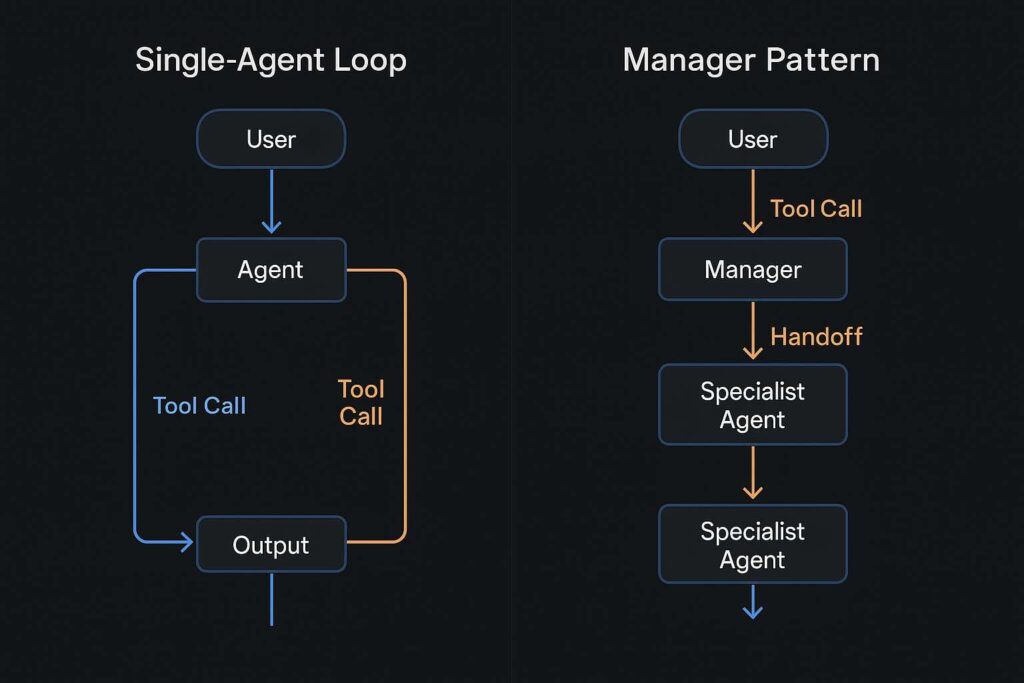

Single-Agent Loop

Start simple. Equip one agent with all necessary tools and loop until an exit condition is met:

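# Requires the openai-agents package (imported as `agents`) and an OPENAI_API_KEY.
from agents import Agent, Runner, function_tool

@function_tool
def get_weather(city: str) -> str:
    """Placeholder tool: replace the body with a real weather lookup."""
    return f"Forecast for {city}: sunny"

agent = Agent(
    name="Weather Agent",
    instructions="You can fetch weather data and answer user queries.",
    tools=[get_weather],
)

# Run the agent until it returns a final output
result = Runner.run_sync(agent, "What’s the forecast for tomorrow?")
print(result.final_output)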

Exit conditions include:

- Invocation of a final-output tool.

- LLM response without any tool calls.

- Error thresholds or turn limits.
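To make the exit conditions above concrete, here is a framework-free sketch of the run loop. It is not the SDK's implementation: `ModelReply`, `run_loop`, `scripted_model` and the `final_output` tool name are assumptions made for illustration, and the model is a scripted stub rather than a real LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ModelReply:
    """What the (stubbed) model returns on each turn."""
    text: str = ""
    tool_name: Optional[str] = None           # None means "no tool call"
    tool_args: dict = field(default_factory=dict)


def run_loop(model: Callable[[list], ModelReply], tools: dict, user_input: str,
             max_turns: int = 5, max_errors: int = 2,
             final_tool: str = "final_output"):
    """Run until an exit condition fires; returns (exit_reason, output)."""
    history = [("user", user_input)]
    errors = 0
    for _ in range(max_turns):
        reply = model(history)
        if reply.tool_name is None:            # plain answer, no tool call
            return "no_tool_call", reply.text
        if reply.tool_name == final_tool:      # explicit final-output tool
            return "final_output_tool", reply.tool_args.get("answer", "")
        try:
            result = tools[reply.tool_name](**reply.tool_args)
        except Exception as exc:               # any tool failure counts as an error
            errors += 1
            if errors >= max_errors:
                return "error_threshold", None
            result = f"error: {exc}"
        history.append(("tool", result))
    return "turn_limit", None


def scripted_model(history: list) -> ModelReply:
    # First turn: call the weather tool. Afterwards: answer in plain text.
    if len(history) == 1:
        return ModelReply(tool_name="get_weather", tool_args={"city": "Paris"})
    return ModelReply(text=f"Tomorrow: {history[-1][1]}")


tools = {"get_weather": lambda city: f"sunny in {city}"}
print(run_loop(scripted_model, tools, "What's the forecast for tomorrow?"))
```

Returning the exit reason alongside the output makes it easy to log why a run stopped, which is useful when tuning turn limits.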

Use prompt templates with variables (e.g. {{user_name}}, {{context}}) to avoid duplicating full prompts for each scenario.
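A small sketch of such a template, using only the standard library, might look like this. The `render` helper and the template text are assumptions for illustration; a templating library would work equally well.

```python
import re

TEMPLATE = (
    "You are a support agent. Greet {{user_name}} by name.\n"
    "Relevant context: {{context}}"
)

# Matches {{name}} with optional whitespace inside the braces.
_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, **values: str) -> str:
    """Fill {{name}} placeholders; raise KeyError if a variable is missing."""
    def substitute(match: re.Match) -> str:
        key = match.group(1)
        if key not in values:
            raise KeyError(f"missing template variable: {key}")
        return str(values[key])

    return _PLACEHOLDER.sub(substitute, template)


print(render(TEMPLATE, user_name="Ada", context="order #1234 is delayed"))
```

Failing loudly on a missing variable is a deliberate choice here: a prompt that silently ships with `{{context}}` still in it is harder to debug than an exception.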

Multi-Agent Systems

As complexity grows, split responsibilities across agents:

Manager Pattern

A central “manager” agent acts like a conductor, delegating tasks to specialised agents via tool calls:

Manager ──calls──> SpanishAgent
        ├─calls──> FrenchAgent
        └─calls──> ItalianAgent

Example:

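# spanish_agent and french_agent are assumed to be Agent instances defined elsewhere.
manager_agent = Agent(
    name="Translation Manager",
    instructions="Delegate translations to appropriate agents.",
    tools=[
        # as_tool exposes a specialist agent to the manager as an ordinary tool
        spanish_agent.as_tool(
            tool_name="translate_to_spanish",
            tool_description="Spanish translation",
        ),
        french_agent.as_tool(
            tool_name="translate_to_french",
            tool_description="French translation",
        ),
    ],
)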

Decentralised Pattern

Peer agents hand off control directly:

User → TriageAgent ─handoff→ OrderAgent

OrderAgent returns final response

Implement a handoff function so agents can transfer state:

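# Pseudocode: order_agent is assumed to be a callable that accepts the shared context.
def handoff_to_order_agent(context):
    # Pass the full conversation context so the order agent can take over.
    return order_agent(context)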

Use the decentralised pattern when no single “manager” needs to stay in control and you prefer domain-specialist agents to take over the conversation fully.
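The handoff idea can be sketched without any framework: each agent either returns a final answer or a marker naming the peer that should take over, and a small runner follows the chain. Everything here (`Context`, `Handoff`, the two agent functions, `run`) is a hypothetical illustration, and the keyword-based routing stands in for an LLM decision.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    messages: list = field(default_factory=list)
    trail: list = field(default_factory=list)   # which agents handled the request


@dataclass
class Handoff:
    target: str   # name of the agent that should take over


def triage_agent(ctx: Context):
    ctx.trail.append("triage")
    if "order" in ctx.messages[-1].lower():
        return Handoff("order")                  # transfer control, state travels in ctx
    return "Could you tell me more about what you need?"


def order_agent(ctx: Context):
    ctx.trail.append("order")
    return "Your order is on its way."


AGENTS = {"triage": triage_agent, "order": order_agent}


def run(ctx: Context, start: str = "triage", max_handoffs: int = 3) -> str:
    """Follow handoffs until an agent returns a final response."""
    current = start
    for _ in range(max_handoffs + 1):
        outcome = AGENTS[current](ctx)
        if isinstance(outcome, Handoff):
            current = outcome.target
            continue
        return outcome
    raise RuntimeError("too many handoffs")


ctx = Context(messages=["Where is my order?"])
print(run(ctx), ctx.trail)
```

The cap on handoffs is a simple safeguard against two agents passing a request back and forth indefinitely.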

Guardrails: Safety & Predictability

Robust guardrails are non-negotiable in production:

- Input Validation

- Reject or sanitise malformed user inputs.

- Tool Usage Limits

- Restrict tools per agent or per workflow stage.

- Output Constraints

- Enforce JSON schemas or token limits to ensure valid responses.

- Error Handling

- Define fallbacks: retry logic, user escalation, or safe exits.
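As one possible illustration of output constraints combined with error handling, the sketch below validates a JSON reply against a required shape, retries on failure, and escalates once the attempts run out. `REQUIRED_FIELDS`, `parse_output` and `call_with_fallback` are hypothetical names, and the hand-rolled check stands in for a proper JSON Schema validator.

```python
import json

# Assumed output contract for a refund workflow (illustrative only).
REQUIRED_FIELDS = {"decision": str, "amount": (int, float)}


def parse_output(raw: str) -> dict:
    """Parse and validate a model reply; raise ValueError if it breaks the contract."""
    data = json.loads(raw)   # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for key, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(key), expected_type):
            raise ValueError(f"field {key!r} is missing or has the wrong type")
    return data


def call_with_fallback(generate, max_attempts: int = 3) -> dict:
    """Retry invalid outputs, then escalate instead of returning bad data."""
    for attempt in range(1, max_attempts + 1):
        try:
            return {"status": "ok", "attempts": attempt,
                    "data": parse_output(generate())}
        except ValueError:
            continue
    return {"status": "escalated", "attempts": max_attempts, "data": None}


# Scripted replies standing in for successive model calls.
replies = iter(["not json",
                '{"decision": "approve"}',
                '{"decision": "approve", "amount": 25}'])
result = call_with_fallback(lambda: next(replies))
print(result)
```

An "escalated" status would typically route the case to a human or a safe exit rather than letting a malformed result reach downstream systems.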

Layer several kinds of guardrail rather than relying on one:

- LLM-based checks (e.g., hallucination detection).

- Rules-based checks (regex, keyword filters).

- API-based checks (OpenAI Moderation API).
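Layering can be sketched as an ordered list of checks, cheapest first, where the first check to object blocks the request. The code below is an assumption-laden illustration: `moderation_stub` and the classifier passed to `make_llm_check` are local stand-ins, not calls to the OpenAI Moderation API or to a real model.

```python
import re
from typing import Callable, Optional

# A check returns a reason string when the input is blocked, otherwise None.
Check = Callable[[str], Optional[str]]


def rules_check(text: str) -> Optional[str]:
    """Rules-based layer: regex and keyword filters."""
    if re.search(r"\b\d{3}-\d{2}-\d{4}\b", text):
        return "rules: looks like a US social security number"
    if "ignore previous instructions" in text.lower():
        return "rules: possible prompt injection"
    return None


def moderation_stub(text: str) -> Optional[str]:
    """API-based layer: stand-in for a call to a moderation service."""
    return "moderation: flagged content" if "attack-plan" in text.lower() else None


def make_llm_check(classify: Callable[[str], bool]) -> Check:
    """LLM-based layer: wrap a (hypothetical) relevance classifier."""
    def check(text: str) -> Optional[str]:
        return None if classify(text) else "llm: off-topic request"
    return check


def run_guardrails(text: str, checks: list) -> Optional[str]:
    """Return the first blocking reason, or None if every layer passes."""
    for check in checks:
        reason = check(text)
        if reason is not None:
            return reason
    return None


checks = [rules_check, moderation_stub,
          make_llm_check(lambda t: "refund" in t.lower())]
print(run_guardrails("I'd like a refund for order 42", checks))
print(run_guardrails("What's the weather like?", checks))
```

Running the cheap deterministic layers first means the slower, costlier LLM check only sees inputs that have already passed the basic filters.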

Monitoring, Evaluation, and Iteration

Continuous improvement separates successful agents from brittle ones:

- Automated Evals

Run end-to-end tests on live workflows to detect regressions.

- User Feedback Loops

Collect satisfaction scores and refine instructions.

- Performance Dashboards

Track latency, error rates, and cost per invocation to guide optimisation.
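The three dashboard metrics named above can be computed from a simple log of runs. The sketch below assumes a hypothetical `RunRecord` shape and made-up figures; a production system would more likely export these values to an existing metrics backend.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class RunRecord:
    latency_s: float
    cost_usd: float
    ok: bool


@dataclass
class Metrics:
    runs: list = field(default_factory=list)

    def record(self, latency_s: float, cost_usd: float, ok: bool) -> None:
        self.runs.append(RunRecord(latency_s, cost_usd, ok))

    def summary(self) -> dict:
        """Average latency, error rate and cost per invocation over all runs."""
        n = len(self.runs)
        if n == 0:
            return {"runs": 0, "avg_latency_s": 0.0,
                    "error_rate": 0.0, "cost_per_invocation_usd": 0.0}
        return {
            "runs": n,
            "avg_latency_s": mean(r.latency_s for r in self.runs),
            "error_rate": sum(1 for r in self.runs if not r.ok) / n,
            "cost_per_invocation_usd": sum(r.cost_usd for r in self.runs) / n,
        }


metrics = Metrics()
metrics.record(latency_s=1.0, cost_usd=0.25, ok=True)
metrics.record(latency_s=2.0, cost_usd=0.50, ok=True)
metrics.record(latency_s=3.0, cost_usd=0.25, ok=False)
metrics.record(latency_s=2.0, cost_usd=0.00, ok=True)
print(metrics.summary())
```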

Deployment Considerations

- Authentication & Authorisation

Gate agents behind secure IAM and role-based access.

- Rate Limiting & Quotas

Prevent runaway costs and maintain service reliability.

- Versioning

Tag agent versions alongside tool interfaces for safe rollbacks.
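As a rough sketch of the quota idea, a fixed-window limiter caps how many agent invocations each user may start per time window. `QuotaLimiter` is a hypothetical helper, and the timestamp is passed in explicitly to keep the example deterministic; in practice it would likely come from `time.monotonic()`, and old windows would need to be pruned.

```python
from collections import defaultdict


class QuotaLimiter:
    """Fixed-window quota: at most `limit` calls per user per `window_s` seconds."""

    def __init__(self, limit: int, window_s: float) -> None:
        self.limit = limit
        self.window_s = window_s
        self._counts = defaultdict(int)   # (user, window index) -> calls made

    def allow(self, user: str, now: float) -> bool:
        """Return True and count the call if the user is under quota."""
        key = (user, int(now // self.window_s))
        if self._counts[key] >= self.limit:
            return False
        self._counts[key] += 1
        return True


limiter = QuotaLimiter(limit=2, window_s=60.0)
for t in (0.0, 10.0, 20.0, 61.0):
    print(t, limiter.allow("alice", now=t))
```

A fixed window is the simplest option; a token bucket or sliding window smooths out bursts at window boundaries at the cost of a little more bookkeeping.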

Conclusion

OpenAI’s “A Practical Guide to Building Agents” provides a clear blueprint—from use-case selection to design, orchestration, guardrails, and monitoring. By adhering to these best practices, you can transform LLMs into autonomous agents that reliably execute workflows at scale while maintaining safety, predictability, and cost-effectiveness.
